I’m starting off this series by talking about Object Oriented Programming. It’s amazing how many people write C# or VB.NET in long, procedural methods, with no real understanding of the fundamentals of OO design.

I know, I know – it’s not you, and you know what? I’m not talking about you… but check out the code from the person next to you. See what I mean?

Just because you’re writing in an OO language doesn’t mean that you’re writing OO code, or taking advantage of the benefits of Object Orientation. An Object Oriented language is more than classes with methods, properties and events (even VB 6 had that!).

Learn the Fundamentals.

Get to know Interfaces, abstract classes, and structs. I’m not picking on the thousands and thousands of extremely talented .NET developers who came from VB6 (I’m one of them), but I am acknowledging the fact that there are OO tools and strategies at your disposal now that never were before. Dig into delegates and the various eventing models, chained constructors, and virtual and overridden methods. Go beyond if statements, while loops and language syntax similarities, and embrace your new polymorphic self.
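To make two of those fundamentals concrete, here is a minimal sketch of chained constructors and an overridden method. It is written in Java, which reads much like C#. In C# the chaining would be written `: this(...)` and `: base(...)`, and the base method would need an explicit `virtual` keyword, because Java methods are overridable by default while C# methods are not. The `Shipment` and `ExpressShipment` classes and their prices are hypothetical, for illustration only.

```java
// Hypothetical classes; the pricing numbers are made up for illustration.
class Shipment {
    protected final String destination;
    protected final double weightKg;

    // Chained constructor: the short form delegates to the full one,
    // so validation and field assignment live in a single place.
    Shipment(String destination) {
        this(destination, 1.0); // default weight of 1 kg (an assumption)
    }

    Shipment(String destination, double weightKg) {
        if (weightKg <= 0) {
            throw new IllegalArgumentException("weight must be positive");
        }
        this.destination = destination;
        this.weightKg = weightKg;
    }

    // Overridable method with a default behavior
    // (in C# this would need the `virtual` keyword).
    double cost() {
        return 5.0 + 2.0 * weightKg;
    }
}

class ExpressShipment extends Shipment {
    ExpressShipment(String destination, double weightKg) {
        super(destination, weightKg); // chain to the base-class constructor
    }

    // Override: reuse the base calculation, then add a flat surcharge.
    @Override
    double cost() {
        return super.cost() + 10.0;
    }
}

class ShipmentDemo {
    public static void main(String[] args) {
        Shipment[] shipments = {
            new Shipment("Dallas"),
            new ExpressShipment("Austin", 3.0)
        };
        // The loop doesn't know which subclass it holds; dispatch picks the override.
        for (Shipment s : shipments) {
            System.out.println(s.destination + ": " + s.cost());
        }
    }
}
```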

Perhaps you’ve been a very successful developer for a very long time. I’m not saying that you have to change how you write all of your code. I am, however, suggesting that you have more tools in your toolbox than you might be aware of, and that if you learn how to use those tools, you will be more successful at using the right tool for the right job.

Go beyond the fundamentals. A friend of mine teaches Object Oriented Analysis and Design at SMU. He once told me that if you have to use an if statement in your code, then you’re not doing OO; it’s procedural. I think he meant it in jest (although he actually doesn’t allow his students to use if statements past the third section of his course), but the point was well taken. Beyond language semantics lies a whole world of patterns, approaches, and ways of doing OO that can make your life much, much simpler.

Pragmatic – Make new friends, but keep the old ones…

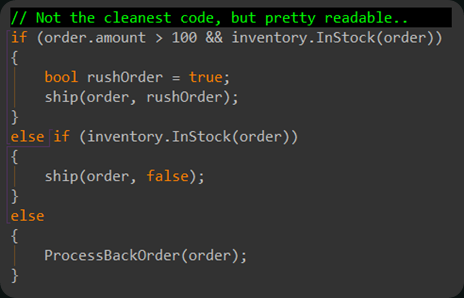

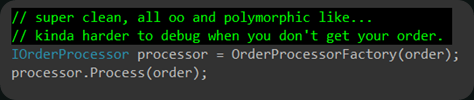

I’m not that much of an OO purist. I actually do feel that sometimes a well laid out “procedural” method can be easier to read and can better communicate the intent of the code, but it’s a balancing act. Every time you write an if/switch statement, you fork the flow of your code and increase its complexity, reducing your ability to maintain and test it. An OO purist might create a polymorphic object at this point and hop over to it instead of writing that if statement. I’m not saying that one way is better than the other. Like I said, writing readable, maintainable, testable code is a balancing act.
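Here is a rough sketch of that trade-off in Java, assuming a hypothetical set of order kinds (`OrderKind`) and delivery times that are made up for illustration; it is not a reconstruction of the code in the images. The procedural version forks on a `switch`. The polymorphic version moves each branch into its own class behind a small interface (`ShippingPolicy`, also hypothetical), so each behavior can be read and tested on its own.

```java
// Hypothetical order kinds and delivery times, for illustration only.
enum OrderKind { STANDARD, EXPRESS, INTERNATIONAL }

// Procedural style: one method forks on the order kind.
// Every new kind means editing (and re-testing) this switch.
class ProceduralShipping {
    static int shippingDays(OrderKind kind) {
        switch (kind) {
            case STANDARD:
                return 5;
            case EXPRESS:
                return 1;
            case INTERNATIONAL:
                return 10;
            default:
                throw new IllegalArgumentException("unknown kind: " + kind);
        }
    }
}

// Polymorphic style: each kind carries its own behavior,
// and the caller just asks whichever policy it was given.
interface ShippingPolicy {
    int shippingDays();
}

class StandardPolicy implements ShippingPolicy {
    public int shippingDays() { return 5; }
}

class ExpressPolicy implements ShippingPolicy {
    public int shippingDays() { return 1; }
}

class InternationalPolicy implements ShippingPolicy {
    public int shippingDays() { return 10; }
}
```

Neither version is wrong. With three kinds, the `switch` is arguably the easier one to read; the polymorphic version starts to pay off as the kinds and their rules multiply.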

Beware of being “too” OO. It’s easy to get too pure with your OO. I’ve worked on systems where I had to fight through 7 or 8 levels of inheritance, debugging all the way, just to figure out what in the world the system was doing. I’ve seen developers take 30 lines of procedural code and convert them into 60 or 70 unique classes spread out over 6 projects. You can have too much of a good thing!

Is it OK to be “procedural” sometimes… can you be too OO?

The truth is, you want to use the right tool for the right job. The code on top is probably pretty readable, and it’s fine… *right now*. It won’t be long, though, before you’ll want to start moving it closer to something like the code on the bottom. Why? With every scenario and rule that gets applied to shipping orders, this code becomes less readable and harder to test. Soon you’re going to want to start validating (unit testing) your various order processes independently of each other. You’re going to want to separate the coordination of an order and the determination of how to handle it (the factory) from how it is actually handled… and as you do that, your code will start to become more SOLID… but we’ll save that for the next post.
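One way that separation might look, sketched in Java with hypothetical names (`Order`, `OrderProcessor`, `OrderProcessorFactory`, `OrderCoordinator`). This is an illustration under those assumptions, not the code from the images. The coordinator only wires the steps together, the factory is the single place that decides which processor applies, and each processor handles one kind of order and can be unit tested on its own.

```java
// Hypothetical order with just enough data to drive the example.
class Order {
    final String id;
    final boolean international;
    final boolean express;

    Order(String id, boolean international, boolean express) {
        this.id = id;
        this.international = international;
        this.express = express;
    }
}

// Handling: each processor knows how to ship one kind of order.
interface OrderProcessor {
    String process(Order order);
}

class DomesticProcessor implements OrderProcessor {
    public String process(Order order) { return "domestic:" + order.id; }
}

class ExpressProcessor implements OrderProcessor {
    public String process(Order order) { return "express:" + order.id; }
}

class InternationalProcessor implements OrderProcessor {
    public String process(Order order) { return "international:" + order.id; }
}

// Determination: the factory is the one place that decides which processor applies.
class OrderProcessorFactory {
    OrderProcessor create(Order order) {
        if (order.international) {
            return new InternationalProcessor();
        }
        if (order.express) {
            return new ExpressProcessor();
        }
        return new DomesticProcessor();
    }
}

// Coordination: wires the steps together without choosing or handling anything itself.
class OrderCoordinator {
    private final OrderProcessorFactory factory;

    OrderCoordinator(OrderProcessorFactory factory) {
        this.factory = factory;
    }

    String ship(Order order) {
        OrderProcessor processor = factory.create(order);
        return processor.process(order); // polymorphic call
    }
}
```

The `if` statements haven't disappeared entirely; they are confined to the factory. Adding a new shipping rule then usually means adding a processor and one branch in the factory, rather than growing one long procedural method.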

Interview Question Alert

Some of the related questions that I often ask:

Q: What’s the difference between an Interface and an Abstract class? When would you use one over the other? What implications does that have?

A: Interfaces define the contract with zero implementation. Abstract classes can provide some implementation alongside abstract members that derived classes must supply. You can inherit from only one base class, but you can implement as many Interfaces as you’d like. Abstract classes are also easier to version: you can add a new non-abstract member with a default implementation without breaking existing subclasses, whereas adding a member to an Interface breaks every class that implements it (at least before C# 8 introduced default interface methods). Bonus Answer: Whenever you create an abstract class, you should always have an Interface to go with it, then code your dependencies to the Interface, not the abstract class.
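A small Java sketch of those points, using hypothetical names. `RateProvider` and `Describable` are contracts with no implementation. `BaseRateProvider` is an abstract class that implements both, supplies shared and default behavior, and leaves `perKg()` abstract. `QuoteService` depends on the interface rather than the abstract class, which is what lets a test double like `FixedRates` implement `RateProvider` directly without inheriting anything.

```java
// Contract only: no implementation. (Hypothetical names throughout.)
interface RateProvider {
    double rateFor(double weightKg);
}

// A second, unrelated contract. A class can implement many interfaces...
interface Describable {
    String describe();
}

// ...but extend only one base class. This abstract class implements both
// interfaces, supplies shared behavior, and leaves perKg() to subclasses.
abstract class BaseRateProvider implements RateProvider, Describable {
    @Override
    public double rateFor(double weightKg) {
        return handlingFee() + perKg() * weightKg;
    }

    // Default behavior that subclasses may override.
    protected double handlingFee() {
        return 1.0;
    }

    // Behavior that every subclass must provide.
    protected abstract double perKg();

    @Override
    public String describe() {
        return "rates: " + perKg() + "/kg";
    }
}

class GroundRates extends BaseRateProvider {
    @Override
    protected double perKg() {
        return 2.0;
    }
}

// The dependency is typed to the interface, not the abstract class...
class QuoteService {
    private final RateProvider rates;

    QuoteService(RateProvider rates) {
        this.rates = rates;
    }

    double quote(double weightKg, int items) {
        return rates.rateFor(weightKg) * items;
    }
}

// ...so a test double can implement RateProvider directly,
// without inheriting any of BaseRateProvider's behavior.
class FixedRates implements RateProvider {
    @Override
    public double rateFor(double weightKg) {
        return 1.0;
    }
}
```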

Q: “Polymorphic” is a big OO word that developers like to throw around. Give a practical example of when you’ve used polymorphic behaviors in your code.

A: Anytime you use an abstract factory, you’re applying polymorphic principles. The “processor.Process()” method above is an example of polymorphism, and so is most Interface-based development.

Further Reading

I hope this post has inspired you at some level to keep honing your development skills. I know that it was heavier on the “why” and lighter on the “how”… I think that’s part of the point. You’ve got to go tackle some of this yourself. So go on, get out there.

Wikipedia – http://en.wikipedia.org/wiki/Object_oriented

Book: Object Thinking – MS Press

What are the resources that have helped you the most in your software development?

Photo Credit: http://www.flickr.com/photos/wwworks/sets/72157594328095699/

I don’t see the need to define an interface for every abstract class. What is your reasoning behind this?

Hi David –

I'm cautious of ever saying “always” and “never”… so there certainly *could* be times when an abstract class by itself is fine. That being said… if I only use an abstract class, then I am locking myself into that implementation. One reason to use an abstract class is if you want to unify some behavior (or provide default behaviors) but then force the developer using your abstract class to provide his/her own implementations of other methods.

For the most part, that's fine, but it can break down when a developer wants to completely swap in their own implementation. The best example of that is unit testing, where a mock implementation would make more sense.

As a general rule, I say use an abstract class if you must, but code against the interface.

Hi Caleb,

Thank you for replying to my query. I agree that there are virtually always exceptions to every rule so I too try to keep away from saying “always” and “never”.

Locking ourselves into an implementation generally comes from extending a concrete class. If the concrete class does not do what we want or if we want to vary something that is not varying then we are stuck. This is why we use abstract classes and (Java/C#) interfaces. If we have a pure virtual method defined either in an abstract class or as part of an interface then we can add a subclass (or implement the interface) to handle that new variation. Most developers I know use one or the other.

When GoF advised us to “Design to Interfaces” they were not speaking of Java/C# interfaces, because they published their book before James Gosling defined the keyword “interface” in his language. What GoF meant was not to code to implementations. There are many ways to do that; the most obvious is through defining method signatures that hide implementation.

Generally, I don’t think it buys much to implement a Java/C# interface for every abstract class we define. Remember, you can override any non-final instance method in Java or any virtual method in C#.

One exception, as you point out, is if I want to insert a mock using dependency injection and the constructor of the object I am mocking does some initialization that I want to avoid. Another situation is if you have divergent hierarchies that need to come together again. A third situation is to implement a technique I call cross-casting to avoid breaking type encapsulation. Examples to illustrate these techniques are more involved than I can put into this comment, but suffice it to say that I can’t really think of many other times when I’d want to have an abstract class implement an interface.

I’ve written about some of this in my blog, if you’d like to know more. I’ll try to blog more on it over the next few weeks. I hope this is helpful.

Thank you,

David.

Hi David,

You are absolutely right that if an Abstract Class is done right then there is much less of a need for an Interface. The place where most C# devs shoot themselves in the foot is that C# methods aren't virtual by default, so the default approach in C# is that classes are less extensible while in Java extensibility is the default.

The other place where abstract classes are frustrating is when you have dependencies in the constructor (which you mentioned). I prefer constructor injection for required dependencies, and that can make mocking and extending an abstract class more problematic than a straightforward Interface.
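A hedged Java sketch of that constructor-injection friction, with hypothetical names throughout. `AbstractStockChecker` requires an `InventoryGateway` in its constructor, so a mock built by subclassing it still has to supply one (here a throwaway lambda). A mock of the `StockChecker` interface carries no constructor baggage at all. `CheckoutService` receives its dependency through its constructor, typed to the interface, so either mock can be passed in.

```java
// Hypothetical dependency that might be expensive or have side effects.
interface InventoryGateway {
    int stockFor(String sku);
}

// The contract the rest of the system depends on.
interface StockChecker {
    boolean inStock(String sku);
}

// Abstract base that takes its required dependency through the constructor.
abstract class AbstractStockChecker implements StockChecker {
    protected final InventoryGateway gateway;

    protected AbstractStockChecker(InventoryGateway gateway) {
        if (gateway == null) {
            throw new IllegalArgumentException("gateway is required");
        }
        this.gateway = gateway;
    }

    @Override
    public boolean inStock(String sku) {
        return gateway.stockFor(sku) > threshold();
    }

    protected abstract int threshold();
}

// A mock built by subclassing must still satisfy the base constructor,
// so the test has to invent a gateway it doesn't care about.
class SubclassMock extends AbstractStockChecker {
    SubclassMock() {
        super(sku -> 0); // throwaway gateway, only to get past the constructor
    }

    @Override
    protected int threshold() { return 0; }

    @Override
    public boolean inStock(String sku) { return true; }
}

// A mock of the interface has no constructor requirements at all.
class InterfaceMock implements StockChecker {
    public boolean inStock(String sku) { return true; }
}

// Consumer using constructor injection, typed to the interface.
class CheckoutService {
    private final StockChecker checker;

    CheckoutService(StockChecker checker) {
        this.checker = checker;
    }

    String checkout(String sku) {
        return checker.inStock(sku) ? "accepted" : "back-ordered";
    }
}
```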

I dug a little more in to this in my SOLID post: http://developingux.com/2010/02/09/solid-develo…

Thanks for the comments David – I'm looking forward to reading your blog posts on the subject.

Hey Caleb,

When I teach the OOP concepts in my .NET courses, I also prohibit the use of an “if” statement to establish the concept of polymorphism. Of course, using an “if” is required in many scenarios that do not make the code procedural. But a seasoned developer is one that knows when to use an “if” and when to create a reusable code pattern that allows for multiple behaviors based on context.

Excellent Michael! Yep – that's how a friend of mine teaches OO at SMU.

Great insight! “a seasoned developer is one that knows when to use an “if” and when to create a reusable code pattern”
